As cyber risks are now discussed at all levels, businesses need to understand the scale of cyber threats and the performance of their security operations functions. This is much like any other strand of organisational activity. Sales has its sales figures and growth; HR has its churn rates and numbers of vacancies; and Quality Assurance has its failure or return rates. Cyber security needs its metrics too.

Cyber security metrics – The poor choices

One challenge in cyber security, though, is that in most cases the ultimate aim is to stop things happening, whether that be data breaches, corruption, insider threats or any other type of incident.

The security controls you put in place to protect systems aim to reduce the prevalence of the figures upon which you need to report. You can report the detected/prevented cases, but often those figures bear no relation to the size of the risk either.

Similarly, if you improve the prevention and detection of a particular family of cyber-attacks (through an investment in some piece of technology, for example), you can go from having no detected cases to dozens of detected cases – and the question then might be why has this problem got worse when you just spent money buying something to stop it. In this situation, ignorance is definitely not bliss – as regulations confirm.

There are, as is probably evident, some very inaccurate security metrics in use. The goal is to find reliable measures that show the actual functioning of the control systems and security processes, then find ways to collect and report on the data that corresponds with those measures.

The problem is that choosing those metrics can be difficult, and can even be influenced by factors that aren’t in tune with the goals of the security function or the business.

Cyber security metrics and data in dashboards

Everyone loves a cyber security dashboard: a single view that contains the most important factors at a glance. The art of dashboards is a chaotic one though – one security manager reported he had a dashboard that had 35 separate key indicators on it. It was largely useless, because so much data was condensed into so small a space that spotting the metrics that should have been concerning was all but impossible.

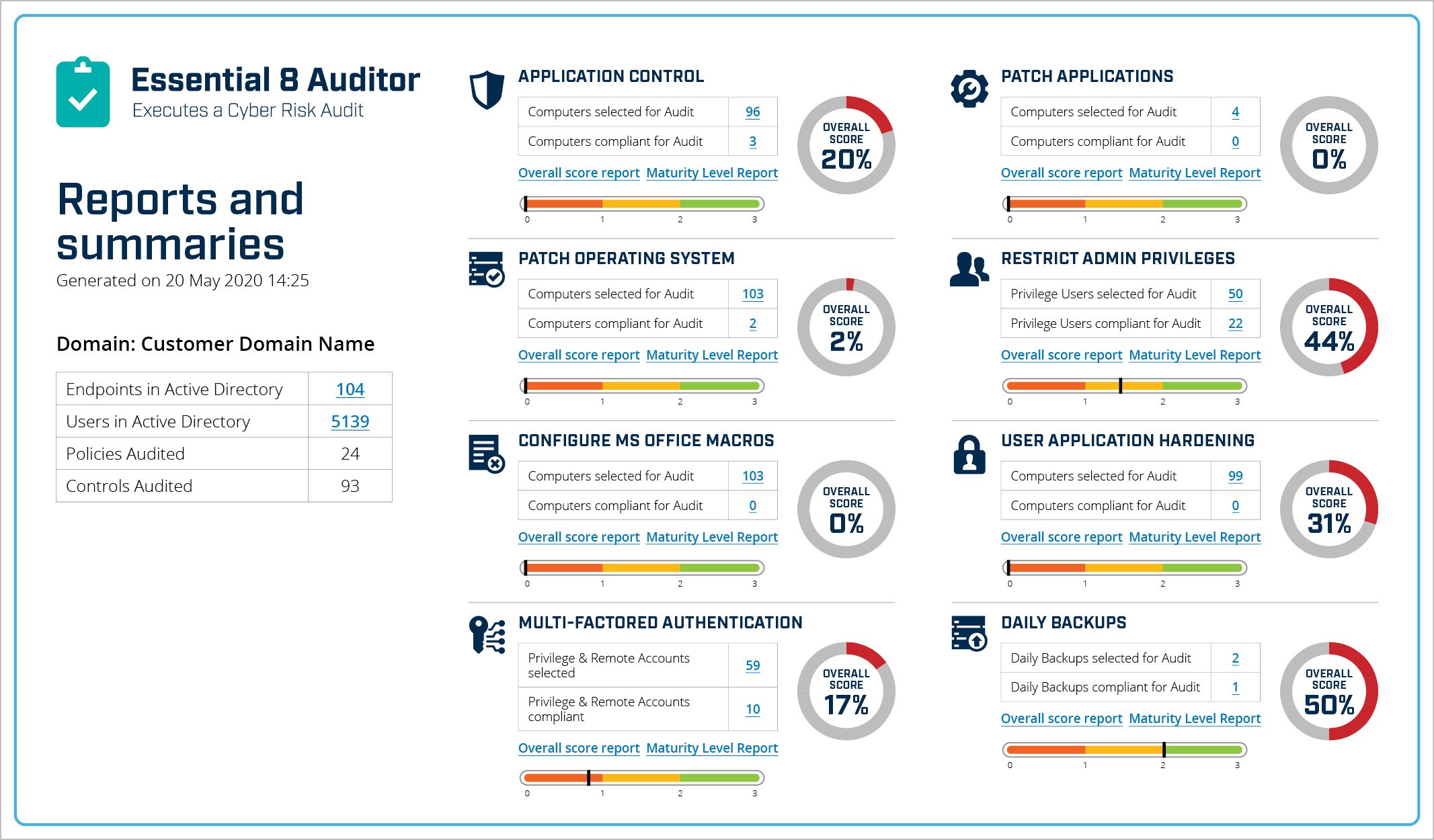

Discover the Essential 8 Auditor – systematic measurement of security controls

The cyber security industry has responded to this demand for dashboards for CISOs with exactly the response you would expect – every vendor of products or services creates a dashboard (or several) and calls it the “CISO dashboard” or similar. However, in the vast majority of cases these dashboards don’t contain the metrics the CISO wants to see; they contain the metrics the vendor wants to show them or (more worryingly) just the metrics the vendor is able to present.

So, an anti-virus CISO dashboard will contain malware detections, quarantines, disinfections, perhaps a list of top ten types that have been identified. An IDAM CISO dashboard will present user and group access information, pending changes, levels of access and dormant accounts. They are both “the CISO dashboard” but there’s no overlap, and the CISO can’t look at two dashboards – imagine trying to drive a car like that.

What you have is a case of vendor narcissism: each vendor of each solution is adamant that the metrics they generate are the metrics the CISO needs to see, so these metrics are what they put on their CISO dashboard. But this production-driven approach, which does not start from the needs of the recipient, creates a selection bias of a very definite kind.

Samples for cyber security assessment

The other way metrics suffer from selection bias is in choosing what the samples or data sets include based on what you want the answer to look like. If, for example, you have good solid controls in place for network intrusion detection and content checking, then the dashboard might lean towards reporting on these efforts simply because they are controlled and have telemetry available. After all, you don’t want incomplete, out-of-date or unflattering data being given higher prominence than accurate, current outputs.

Or perhaps you have a patching solution that applies, monitors and reports on the systems it covers and gives clear stats. That gives you a good (that is, favourable) output, but it will omit the legacy systems that you know can’t be patched and the development networks that are often excluded or handled manually, and so left in a less well patched and less well measured state.
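The gap between the figure a patching tool reports and the figure for the whole estate can be illustrated with a small Python sketch. Everything here is hypothetical: the `Asset` fields, the `patch_rates` helper and the sample inventory are invented for illustration and do not represent any particular product's output.

```python
from dataclasses import dataclass


@dataclass
class Asset:
    name: str
    in_patch_tool: bool  # hypothetical flag: is the asset managed by the patching solution?
    patched: bool        # hypothetical flag: is the asset currently up to date?


def patch_rates(assets):
    """Compare the rate the tool would report with the rate across the full inventory."""
    covered = [a for a in assets if a.in_patch_tool]
    reported = sum(a.patched for a in covered) / len(covered) if covered else 0.0
    estate = sum(a.patched for a in assets) / len(assets) if assets else 0.0
    coverage = len(covered) / len(assets) if assets else 0.0
    return {"reported_rate": reported, "coverage": coverage, "estate_rate": estate}


# Illustrative inventory: legacy and development systems sit outside the tool.
inventory = [
    Asset("web-01", True, True),
    Asset("web-02", True, True),
    Asset("db-01", True, True),
    Asset("hr-app", True, False),
    Asset("legacy-erp", False, False),
    Asset("dev-01", False, False),
    Asset("dev-02", False, True),
    Asset("dev-03", False, False),
]

rates = patch_rates(inventory)
print(rates)
```

In this made-up inventory the tool would report 75% of its systems patched, while only half of the estate is covered by the tool and only half of all systems are actually patched. Reporting the coverage figure alongside the headline rate is one way to make the omission visible.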

In some cases, you might find controls or systems are deliberately chosen for reporting purposes because they are known to be well managed, or conversely because they are known to contain exceptions. This will depend on the point you are trying to make or story you wish the report metrics to tell – success or failure.

Once again, selection bias is affecting the results and report that get presented.

Time pressures

The next area where metric selection can be distorted is in the ease of collecting the input data. Remembering that security operations teams are often under resourced and/or overworked, it is not surprising that when asked to report on a security control, or a set of safeguards, they are likely to draw data from the easiest sources.

This might mean that you get clear and accurate data for systems in the main office location, but less reliable metrics from outlying satellite offices, or that you find controls or systems that allow the easy generation and export of outputs get covered well, whereas systems that don’t make outputs quite as easy to obtain get lower prominence.

Here, selection bias is a simple case of “the path of least resistance”. If people are choosing samples, or controls, they are likely to choose the ones they know they can get useful data from most easily, rather than those that are most representative or worrisome.
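One way to take the choice out of the reporter's hands is to draw the sample at random from every location, rather than picking whichever systems are easiest to query. The following Python sketch shows the idea under stated assumptions: the `stratified_sample` helper, the site names and the system names are all hypothetical.

```python
import random
from collections import defaultdict


def stratified_sample(systems, per_site, seed=0):
    """Pick up to `per_site` systems at random from every site.

    `systems` is a list of (system_name, site_name) pairs. Sampling within
    each site means satellite offices appear even when head office dominates.
    """
    rng = random.Random(seed)  # fixed seed so the selection can be reproduced
    by_site = defaultdict(list)
    for name, site in systems:
        by_site[site].append(name)

    sample = {}
    for site, names in sorted(by_site.items()):
        k = min(per_site, len(names))  # small sites contribute what they have
        sample[site] = sorted(rng.sample(names, k))
    return sample


# Illustrative estate: twenty head-office systems and a handful elsewhere.
systems = (
    [(f"hq-{i:02d}", "head-office") for i in range(20)]
    + [(f"br-{i}", "branch-a") for i in range(3)]
    + [("kiosk-1", "branch-b")]
)

picked = stratified_sample(systems, per_site=2)
print(picked)
```

The systems selected this way may well be harder to collect data from, which is the point: the effort needed to report on them is itself a useful signal about how well they are measured.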

Cyber security measurements that affect the results

The last example of where security metrics can be misleading is when the presence of a control or detection capability generates outputs, but itself affects those outputs.

So if you have an IT network or perimeter that is thought to be relatively resistant to attack and you add an IDS solution to it, you may find that, having spent a sum of money on a new piece of security monitoring technology to improve cyber resilience, you are actually seeing a large rise in the number of alerts because of it.

Similarly, your “onion skin” approach might mean lots of “detected threats” at the outer layers lead to a very small number of incidents occurring deeper inside, and thus almost undermine the business case for the more internal controls that are seldom the point of interception.

So, if you are reporting on anti-virus defences, do you choose the outer defences that have high numbers of infections detected for your metrics, or the inner controls that scarcely ever sound the alarm?

Avoiding cyber security audit selection bias

Huntsman Security is a vendor whose customers are security operations teams, and no one is immune to the sorts of challenges discussed in this blog: defining and reporting on cyber security performance metrics.

In Huntsman Security’s case, with its Auditor and Scorecard solutions, we didn’t try to massage, abridge and translate the outputs from our Security Analytics technologies into a CISO dashboard. Instead we followed the government standards and guidance in the Essential 8 Framework and created solutions that track and report on control metrics based on those risk-based selection criteria – the security controls that are involved in 85% of security breaches.

About Huntsman